Abstract

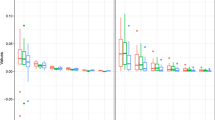

Recently, Fine and Gray (J Am Stat Assoc 94:496–509, 1999) proposed a semi-parametric proportional regression model for the subdistribution hazard function, which has been used extensively for analyzing competing risks data. However, an inadequate model can lead to severe bias in parameter estimation, and little work has been done on checking the model assumptions. In this paper, we present a class of analytical methods and graphical approaches for checking the assumptions of Fine and Gray’s model. The proposed goodness-of-fit test procedures are based on cumulative sums of residuals and validate the model in three aspects: (1) proportionality of the hazard ratio, (2) the linear functional form and (3) the link function. For each assumption tested, we provide a \(p\)-value and a plot against the null hypothesis using a simulation-based approach. We also consider an omnibus test for overall evaluation against any model misspecification. The proposed tests perform well in simulation studies and are illustrated with two real data examples.

References

Andersen PK, Borgan Ø, Gill RD, Keiding N (1993) Statistical models based on counting processes. Springer-Verlag, New York

Dickson E, Grambsch P, Fleming T, Fisher LD, Langworthy A (1989) Prognosis in primary biliary cirrhosis: model for decision making. Hepatology 10:1–7

Fine JP, Gray RJ (1999) A proportional hazards model for the subdistribution of a competing risk. J Am Stat Assoc 94:496–509

Fleming TR, Harrington DP (1991) Counting processes and survival analysis. Wiley, New York

Kim H (2007) Cumulative incidence in competing risks data and competing risks regression analysis. Clin Cancer Res 13:559–565

Lau B, Cole S, Gange S (2009) Competing risk regression models for epidemiologic data. Am J Epidemiol 170:244–256

Lin DY, Wei LJ, Ying Z (1993) Checking the Cox model with cumulative sums of martingale-based residuals. Biometrika 80:557–572

Perme M, Andersen P (2008) Checking hazard regression models using pseudo-observations. Stat Med 27(25):5309–5328

Scheike TH, Zhang MJ (2008) Flexible competing risks regression modelling and goodness-of-fit. Lifetime Data Anal 14:464–483. PMCID: PMC2715961

Scheike TH, Zhang MJ, Gerds T (2008) Predicting cumulative incidence probability by direct binomial regression. Biometrika 95:205–220

Scrucca L, Santucci A, Aversa F (2007) Competing risk analysis using R: an easy guide for clinicians. Bone Marrow Transpl 40(4):381–387

Weisdorf D, Eapen M, Ruggeri A, Zhang M, Zhong X, Brunstein C, Ustun C, Rocha V, Gluckman E (2013) Alternative donor hematopoietic transplantation for patients older than 50 years with AML in first complete remission: unrelated donor and umbilical cord blood transplantation outcomes. Blood 122:302

Wolbers M, Koller M, Witteman J, Steyerberg E (2009) Prognostic models with competing risks: methods and application to coronary risk prediction. Epidemiology 20(4):555–561

Zhou B, Fine J, Laird G (2013) Goodness-of-fit test for proportional subdistribution hazards model. Stat Med 32(22):3804–3811

Appendix

Consider the following partial sums of residuals

where \(f(x,\varvec{Z}_i,\varvec{v},p)=(\varvec{v}^\mathsf T \varvec{Z}_i)^p 1\!\!1\left( \varvec{v}^\mathsf T \varvec{Z}_i\le x\right) \). Here \(\varvec{v}\) is a vector of the same dimension as the covariates \(\varvec{Z}\), and \(p=0\) or \(1\). Under the null hypothesis that the FG model is valid,

Taking the Taylor expansion of \(\varvec{U}(\hat{\varvec{\beta }},t)\) at \(\varvec{\beta }_0\), we can obtain

where \(\varvec{\varOmega }=\lim _{n\rightarrow \infty }\varvec{I}(\varvec{\beta }_0)/n\) and, asymptotically, \(\varvec{U}(\varvec{\beta }_0)\) can be expressed as a sum of \(n\) independent and identically distributed random variables, i.e. \(n^{-1/2}\varvec{U}(\varvec{\beta }_0)=n^{-1/2}\sum _{i=1}^n(\varvec{\eta _i}+\varvec{\psi _i})\,{+}\,o_p(1)\) (for explicit expressions of \(\varvec{\eta _i}\) and \(\varvec{\psi _i}\), see Fine and Gray (1999)). Since \(\varvec{\eta _i}\) contributes the majority of the variability, we call \(\varvec{\eta _i}\) the major term and \(\varvec{\psi _i}\) the minor term. Both \(\varvec{\eta _i}\) and \(\varvec{\psi _i}\) are zero-mean Gaussian processes. Therefore

Recall that

therefore

Furthermore, for (11), taking the Taylor expansion of \(1/S_0(\hat{\varvec{\beta }},u)\) at \(\varvec{\beta }_0\), we can obtain

For (12),

where

Plugging (11) and (12) into (9), we have

Under the asymptotic regularity conditions:

Exchange the order of summation in (14) and combine the result with (7). When \(n\mathop {\rightarrow }\limits ^{p}\infty \),

where

Combine (8) and (15). When \(n\mathop {\rightarrow }\limits ^{p}\infty \),

where

Exchange the order of summation in (16). When \(n\mathop {\rightarrow }\limits ^{p}\infty \),

where

So

where

which can be consistently estimated by the plug-in estimators.
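
The abstract mentions that \(p\)-values are obtained by a simulation-based approach, but this excerpt does not spell out the procedure. The following is a minimal sketch, assuming it follows the Gaussian multiplier technique of Lin et al. (1993): each subject's estimated i.i.d. contribution is multiplied by an independent standard normal variable, and the supremum of the resulting process is compared with the observed supremum. The function names, the grid representation and the input `iid_terms` are hypothetical; computing the plug-in contributions themselves is not shown.

```python
import math
import random


def sup_abs(path):
    """Supremum-type statistic: largest absolute value of a process on a grid."""
    return max(abs(value) for value in path)


def multiplier_p_value(observed_path, iid_terms, n_sim=1000, seed=0):
    """Approximate a p-value by Gaussian multiplier resampling (assumed scheme).

    observed_path: observed process, scaled by n^{-1/2}, evaluated on a grid.
    iid_terms:     iid_terms[i][k] is the plug-in estimate of subject i's
                   contribution at grid point k (hypothetical input).
    """
    rng = random.Random(seed)
    n = len(iid_terms)
    grid_size = len(observed_path)
    observed_sup = sup_abs(observed_path)

    exceed = 0
    for _ in range(n_sim):
        # One independent N(0, 1) multiplier per subject.
        g = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # Simulated realization: n^{-1/2} * sum_i G_i * W_i at each grid point.
        simulated = [
            sum(g[i] * iid_terms[i][k] for i in range(n)) / math.sqrt(n)
            for k in range(grid_size)
        ]
        if sup_abs(simulated) >= observed_sup:
            exceed += 1
    # Proportion of simulated suprema at least as large as the observed one.
    return exceed / n_sim
```

Plotting the observed path together with a handful of the simulated paths would give the kind of visual check against the null hypothesis that the abstract describes.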

1.1 Test proportional subdistribution hazards assumption

To check the proportional subdistribution hazards assumption, we consider the score process \(U_j(\hat{\varvec{\beta }},t)\) for each covariate, which can be written as

It is a special case of the general form \(\varvec{B}(t,x)\) with \(x=\infty \), \(p=1\) and \(\varvec{v}\) having \(1\) in the \(j\)th element and \(0\) elsewhere. Under the null hypothesis,

where \(s_{1j}(\varvec{\beta }_0,u)\) is the \(j\)th element of \(\varvec{s}_1(\varvec{\beta }_0,u)\) and

In practice, we standardize the process by multiplying it by \(I_{jj}^{-1}(\hat{\varvec{\beta }})\), and denote \(B^{(p)}_j(t)=I_{jj}^{-1}(\hat{\varvec{\beta }})U_j(\hat{\varvec{\beta }},t)\) in the text.

1.2 Test linear functional form

To test the linear functional form for the \(j\)th covariate, we consider

which is a special case of the general form \(\varvec{B}(t,x)\) when \(t=\infty \), \(p=0\), and \(\varvec{v}\) has \(1\) in the \(j\)th element and \(0\) elsewhere. Under the null hypothesis,

where

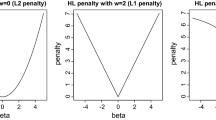

1.3 Test link function

To test the link function, we consider

In this case, \(t=\infty \), \(p=0\) and \(\varvec{v}=\hat{\varvec{\beta }}\). Under the null hypothesis, the test statistic has exactly the same form as the test statistic for the linear functional form, except that the indicator function is replaced by \(1\!\!1\left\{ \hat{\varvec{\beta }}^\mathsf{T } \varvec{Z}_{i} \le x\right\} \). Note that if there is only one covariate, checking the link function is equivalent to checking the functional form of the covariate.
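
The special cases in Sects. 1.1 to 1.3 differ only in how \(\varvec{v}\), \(p\) and \(x\) are chosen in the weight \(f(x,\varvec{Z}_i,\varvec{v},p)\). As an illustration, the sketch below evaluates this weight for a single subject under each choice. The function names, the covariate values and the coefficients are hypothetical, and covariates are indexed from 0 rather than 1.

```python
import math


def weight(x, z, v, p):
    """f(x, Z_i, v, p) = (v^T Z_i)^p * 1(v^T Z_i <= x), with p equal to 0 or 1."""
    if p not in (0, 1):
        raise ValueError("p must be 0 or 1")
    score = sum(vk * zk for vk, zk in zip(v, z))  # v^T Z_i
    indicator = 1.0 if score <= x else 0.0
    # For p = 0 the weight is the indicator alone; for p = 1 it is score * indicator.
    return indicator if p == 0 else score * indicator


def unit_vector(j, dim):
    """Vector with 1 in the j-th element (0-based) and 0 elsewhere."""
    return [1.0 if k == j else 0.0 for k in range(dim)]


z_i = [2.0, -1.0]      # hypothetical covariate vector for one subject
beta_hat = [0.5, 1.5]  # hypothetical fitted coefficients

# Proportionality check for covariate j = 0: x = infinity, p = 1, v = e_j,
# so the weight reduces to the covariate value itself.
w_prop = weight(math.inf, z_i, unit_vector(0, 2), 1)

# Functional-form check for covariate j = 0 at x = 1.0: p = 0, v = e_j,
# so the weight is the indicator 1(Z_ij <= x).
w_form = weight(1.0, z_i, unit_vector(0, 2), 0)

# Link-function check at x = 0.0: p = 0, v = beta_hat,
# so the weight is the indicator 1(beta_hat^T Z_i <= x).
w_link = weight(0.0, z_i, beta_hat, 0)
```

With a single covariate, `unit_vector(0, 1)` and `beta_hat` differ only by a scale factor, which is why the link-function and functional-form checks coincide in that case.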

1.4 Omnibus test

Here we consider

When the \(j\)th covariate is of interest for testing, the \(j\)th element of \(\varvec{B}^{(o)}(t,x)\) is the statistic of interest,

which is a special case of the general form \(\varvec{B}(t,x)\) when \(p=0\) and \(\varvec{v}\) has \(1\) in the \(j\)th element and \(0\) elsewhere. The omnibus test can also be viewed as tracking each linear functional form test over the time span. Under the null hypothesis,

where

Li, J., Scheike, T.H. & Zhang, MJ. Checking Fine and Gray subdistribution hazards model with cumulative sums of residuals. Lifetime Data Anal 21, 197–217 (2015). https://doi.org/10.1007/s10985-014-9313-9

DOI: https://doi.org/10.1007/s10985-014-9313-9