Abstract

Objectives

Discrete choice experiments (DCEs) are increasingly advocated as a way to quantify preferences for health. However, increasing support does not necessarily result in increasing quality. Although specific reviews have been conducted in certain contexts, there exists no recent description of the general state of the science of health-related DCEs. The aim of this paper was to update prior reviews (1990–2012), to identify all health-related DCEs and to provide a description of trends, current practice and future challenges.

Methods

A systematic literature review was conducted to identify health-related empirical DCEs published between 2013 and 2017. The search strategy and data extraction replicated prior reviews to allow the reporting of trends, although additional extraction fields were incorporated.

Results

Of the 7877 abstracts generated, 301 studies met the inclusion criteria and underwent data extraction. In general, the total number of DCEs per year continued to increase, with broader areas of application and increased geographic scope. Studies reported using more sophisticated designs (e.g. D-efficient) with associated software (e.g. Ngene). The trend towards using more sophisticated econometric models also continued. However, many studies presented sophisticated methods with insufficient detail. Qualitative research methods continued to be a popular approach for identifying attributes and levels.

Conclusions

The use of empirical DCEs in health economics continues to grow. However, inadequate reporting of methodological details inhibits quality assessment. This may reduce decision-makers’ confidence in results and their ability to act on the findings. How and when to integrate health-related DCE outcomes into decision-making remains an important area for future research.


Quantifying preferences for healthcare is becoming increasingly popular; however, there exists no recent description of how health-related discrete choice experiments (DCEs) are being employed.

This study identified changes in experimental design, analytical methods, validity tests, qualitative methods and outcome measures over the last 5 years.

To facilitate quality assessment and better integration into health decision-making, future DCE reports should include more complete information, which might be achieved by developing reporting guidelines specifically for DCEs.

1 Introduction

In recent years, there have been increased calls for patient and public involvement in healthcare decision-making [1, 2]. Patient or public involvement can support decision-making at multiple levels: individual (shared decision-making), policy (patient experts on panels) and commissioning (incorporating patient preferences in technology evaluations or health state valuation). Views can be elicited qualitatively, quantitatively or using mixed-methods approaches [3]. Example methods include interviews, focus groups and stated preference techniques such as the standard gamble or time trade-off. Studies by the Medical Device Innovation Consortium (MDIC) [4] and Mahieu et al. [5] highlighted the wide variety of methods available to measure both stated and revealed preferences in healthcare.

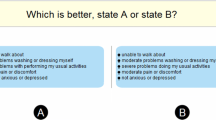

Among the quantitative methods for eliciting stated health preferences, discrete choice experiments (DCEs) are increasingly advocated [6]. In a DCE individuals are asked to select their preferred (and/or least preferred) alternative from a set of alternatives. DCEs are grounded in theories which assume that (1) alternatives can be described by their attributes, (2) an individual’s valuation depends upon the levels of these attributes, and (3) choices are based on a latent utility function [7,8,9,10]. The theoretical foundations have implications for the experimental design (principles to construct alternatives and choice sets) and the probabilistic models used to analyse the choice data [7].
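The three assumptions above correspond to the standard random utility formulation, sketched below in common textbook notation (the symbols are ours, not taken from the reviewed studies). Respondent $n$ facing choice set $C_s$ derives latent utility $U_{nsj}$ from alternative $j$, described by attribute vector $\mathbf{x}_{nsj}$; the alternative with the highest utility is chosen, and if the errors are IID extreme value type I the choice probabilities take the multinomial logit form. The last line assumes the error scale is normalised to one, so $\boldsymbol{\beta}$ is identified only relative to the error variance.

```latex
\begin{align}
U_{nsj} &= V_{nsj} + \varepsilon_{nsj}
         = \boldsymbol{\beta}^{\top}\mathbf{x}_{nsj} + \varepsilon_{nsj}, \\
y_{ns} = j &\iff U_{nsj} > U_{nsi} \quad \forall\, i \in C_s,\; i \neq j, \\
P_{nsj} &= \frac{\exp\!\left(\boldsymbol{\beta}^{\top}\mathbf{x}_{nsj}\right)}
               {\sum_{i \in C_s} \exp\!\left(\boldsymbol{\beta}^{\top}\mathbf{x}_{nsi}\right)}.
\end{align}
```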

Previously conducted broad reviews by Ryan and Gerard (1990–2000) [11], de Bekker-Grob et al. (2001–2008) [7] and Clark et al. (2009–2012) [6] identified a number of methodological challenges of DCEs (e.g. how to choose among orthogonal, D-efficient and other designs or how to account for preference heterogeneity when analysing choice data). These reviews, together with published checklists [12] and best-practice guidelines [13,14,15,16,17], provide specific guidance intended to improve study quality [12, 18]. However, it is unknown whether the challenges identified in prior reviews are still relevant or whether there has been a response to the published suggestions and guidelines. Furthermore, although health-related DCEs are increasingly advocated by organisations such as the MDIC [4], their use for actual decision-making in health remains limited [7, 13]. Key barriers to their wider use in policy include concerns about the robustness and validity of the method and the quality of applied studies [19, 20].

This paper seeks to provide a current overview of the applications of DCEs in health economics and the methods they use. This overview will be created by systematically reviewing DCE literature and extracting data from the period 2013–2017. In addition, historical trends in experimental design, analytical methods, validation procedures and outcome measures will be described by comparing the results to those of prior reviews. For the sake of generality and to allow examination of trends based on consistent data extraction methods, this comparison will focus on the broad reviews cited above, rather than on narrower reviews of DCEs covering specific study designs or disease areas [21,22,23,24,25,26,27,28,29,30,31,32,33,34,35,36,37,41]. Recent developments in DCE methods will be incorporated by including new data elements not reported in previous reviews. Potential challenges and recommendations for future research will also be identified.

2 Methods

The current systematic review continued the work conducted in the prior broad DCE reviews [6, 7, 11] by focusing on DCE applications published between 2013 and 2017. The methodology for this systematic review built on that of the prior reviews to allow comparison of results across review periods and identification of trends. The search was initiated in May 2015 and updated in February 2016 and January 2018. We used the same search engine (PubMed) that was used in the latest review by Clark et al. [6] and generally used the same search terms. We decided to exclude the search terms ‘conjoint’ and ‘dce’, since these yielded too many irrelevant results (particularly due to the rise of dynamic contrast-enhanced imaging in gene expression profiling) and would have substantially increased the number of abstracts to be reviewed. The final search terms included ‘discrete choice experiment’, ‘discrete choice experiments’, ‘discrete choice modeling’, ‘discrete choice modelling’, ‘discrete choice conjoint experiment’, ‘stated preference’, ‘part-worth utilities’, ‘functional measurement’, ‘paired comparisons’, ‘pairwise choices’, ‘conjoint analysis’, ‘conjoint measurement’, ‘conjoint studies’, ‘conjoint choice experiment’ and ‘conjoint choice experiments’. A study was included if it was applied to health, included a discrete choice exercise (rather than rating or ranking), focused on human beings and was published as a full-text article in English between January 2013 and December 2017. Consistent with prior reviews, DCEs without empirical data (e.g. methodological studies) and studies of samples already included in our review were excluded.
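For readers wishing to rerun a comparable search, the listed phrases could be combined into a single PubMed query along the lines of the Python sketch below. This is an assumption about how such a query might be written, not the review's actual query string (which is not reported): the [tiab] field tag and the [dp] date-range syntax are our choices, and the remaining inclusion criteria (health application, empirical data, human participants, English full text) are screening decisions that the query does not encode.

```python
# Search phrases as listed in the review; 'conjoint' and 'dce' were
# deliberately dropped because they returned too many irrelevant hits.
SEARCH_TERMS = [
    "discrete choice experiment",
    "discrete choice experiments",
    "discrete choice modeling",
    "discrete choice modelling",
    "discrete choice conjoint experiment",
    "stated preference",
    "part-worth utilities",
    "functional measurement",
    "paired comparisons",
    "pairwise choices",
    "conjoint analysis",
    "conjoint measurement",
    "conjoint studies",
    "conjoint choice experiment",
    "conjoint choice experiments",
]


def build_pubmed_query(terms, start="2013/01/01", end="2017/12/31"):
    """Join quoted phrases with OR and restrict to a publication-date range.

    Assumption: the [tiab] (title/abstract) tag and the [dp] range syntax are
    one plausible way to express the search, not the authors' exact query.
    """
    phrases = " OR ".join(f'"{term}"[tiab]' for term in terms)
    return f"({phrases}) AND ({start}:{end}[dp])"


query = build_pubmed_query(SEARCH_TERMS)
print(query)
```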

To ensure consistency of data extraction and assist with synthesis of results, the authors used an extraction tool, available in Appendix A of the Electronic Supplementary Material, initially developed using the criteria of Clark et al. [6]. We first considered areas of application (e.g. patient consumer experience, valuing health outcomes) and background information (country of origin, number and type of attributes, number of choice sets, survey administration method), followed by more detailed information about the experimental design (type, plan, use of blocking, design software, design source, method used to create choice sets, number of alternatives, presence of an opt-out or status quo option, sample size and type), data analysis (model, analysis software, model details), validity checks (external and internal), use of qualitative methods (type and rationale) and presented outcome measures. The authors tested the extraction tool and discussed initial results. To fully capture current DCE design methods, the following data elements were added to the original data extraction tool: number of alternatives, presence of an opt-out or status quo, sample size, use of blocking, use of a Bayesian design approach, software for econometric analyses and the type of qualitative research methods reported. With regard to analysis methods, this review also extracted additional information on the use of scale-adjusted latent class, heteroskedastic conditional logit and generalised multinomial models. Studies were also categorised by journal type.

Each author extracted data from a group of articles, checking online appendices and supplementary materials where relevant. A subsample of studies (20%) was double-checked by V.S. for quality control. We categorised the extracted data and reported the results as percentages. Results for the econometric analysis models were categorised based on the three key characteristics of the multinomial logit model (Fig. 1): (1) the assumption that error terms are independent and identically distributed (IID) according to the extreme value type I distribution, (2) independence of irrelevant alternatives (IIA) (resulting from the first characteristic) and (3) the presence or absence of preference heterogeneity [7]. The IID assumption restricts how the error variance can be modelled; IIA restricts the substitution pattern, because the ratio of the choice probabilities of any two alternatives does not depend on which other alternatives are in the choice set; and assumptions about preference heterogeneity determine whether preferences are allowed to vary across respondents.
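The IIA property can be checked numerically. The Python sketch below is illustrative only: the systematic utilities are hypothetical, not taken from any reviewed study. It computes multinomial logit probabilities and shows that adding a third alternative changes every choice probability but leaves the ratio between the original two unchanged.

```python
import math


def mnl_probabilities(utilities):
    """Multinomial logit choice probabilities for one choice set.

    With IID extreme value type I errors (scale normalised to 1), the
    probability of choosing alternative j is exp(V_j) / sum_i exp(V_i).
    """
    m = max(utilities)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in utilities]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical systematic utilities V = beta'x for two treatment alternatives.
v_a, v_b = 0.8, 0.2
two = mnl_probabilities([v_a, v_b])

# Adding a third alternative (e.g. an opt-out with V = 0) changes every probability...
three = mnl_probabilities([v_a, v_b, 0.0])

# ...but the ratio P(A)/P(B) = exp(V_A - V_B) is unchanged: this is IIA.
ratio_two = two[0] / two[1]
ratio_three = three[0] / three[1]
print(round(ratio_two, 4), round(ratio_three, 4))  # both equal exp(0.6), about 1.8221
```

Models that relax IIA, such as mixed logit, break this proportional substitution by allowing correlation across alternatives or respondents.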

3 Results

3.1 Search Results

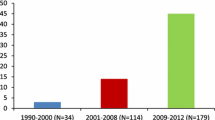

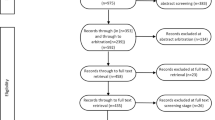

A total of 7877 abstracts were identified from the beginning of 2013 until the end of 2017. After abstract and full-text review, 301 DCEs (including six case 3 best–worst scaling [BWS] studies) met the inclusion criteria and were selected for data extraction (see Fig. 2) [43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126,127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162,163,164,165,166,167,168,169,170,171,172,173,174,175,176,177,178,179,180,181,182,183,184,185,186,187,188,189,190,191,192,193,194,195,196,197,198,199,200,201,202,203,204,205,206,207,208,209,210,211,212,213,214,215,216,217,218,219,220,221,222,223,224,225,226,227,228,229,230,231,232,233,234,235,236,237,238,239,240,241,242,243,244,245,246,247,248,249,250,251,252,253,254,255,256,257,258,259,260,261,262,263,264,265,266,267,268,269,270,271,272,273,274,275,276,277,278,279,280,281,282,283,284,285,286,287,288,289,290,291,292,293,294,295,296,297,298,299,300,301,302,303,304,305,306,307,308,309,310,311,312,313,314,315,316,317,318,319,320,321,322,323,324,325,326,327,328,329,330,331,332,333,334,335,336,337,338,339,340,341,342,343]. Figure 3 depicts the total number of DCE applications in health across the different review periods: 1990–2000, 2001–2008, 2009–2012 and 2013–2017. The 2009–2012 review reported that the number of studies had increased to 45 per year on average [6]. The current review period found 60 studies per year on average, with a high of 98 studies in 2015 and a low of 32 studies in 2017 (Fig. 3). Figure 3 also shows that the increase in DCE applications between the prior review periods and the current review period was less consistent than the increases observed in prior periods.

3.2 Areas of Application

Prior reviews noted that although DCEs were originally introduced in health economics to value patient or consumer experience, the use of DCEs has broadened considerably [6, 344]. Table 1 summarises information about the different areas of application of DCEs for each review period (Appendix B of the Electronic Supplementary Material contains figures based on the tables in this review). Compared with the most recent prior review period (2009–2012), the largest overall shifts occurred in the areas of patient consumer experience (category A), trade-offs between health outcomes and patient or consumer experience factors (category C), and health professionals’ preferences for treatment or screening (category G). In the current review period, 8% of studies valued health outcomes such as ‘heart attacks avoided’ (category B, 23 studies, e.g. studies [148, 152, 153, 162, 170]), 4% estimated utility weights within the quality-adjusted life year (QALY) framework (category D, 13 studies, e.g. [218, 226,227,228, 230]), 6% focused on job choices (category E, 17 studies, e.g. [231, 236, 238, 242, 247]), and 9% developed priority-setting frameworks (category F, 27 studies, e.g. [248, 253, 270, 272, 274]).

Among the DCEs reviewed, the most common journal focus was health services research (n = 139; 46%). About a third (n = 102; 34%) of articles were published in specialty-focused medical journals such as Vaccine (five studies [66, 131, 146, 311, 313]) or the British Journal of Cancer (three studies [47, 70, 171]). Fifty-one (17%) were published in general medical journals such as PLoS One (20 studies, e.g. [44, 64, 81, 91, 99]) and BMJ Open (five studies [100, 102, 109, 169, 264]). More details can be found in Appendix C of the Electronic Supplementary Material.

3.3 Background Information About DCEs

The reviews from Ryan and Gerard [11], de Bekker-Grob et al. [7] and Clark et al. [6] provided detailed information about study characteristics. Information for the current review period is described in the sections below. Table 2 parts (a) and (b) report the current information alongside data from the prior reviews.

3.3.1 Country of Origin

Table 2a shows that UK-based studies made up a relatively high proportion of published DCEs (17%, 50 studies), as did studies from the US (17%, 50 studies), Australia (10%, 30 studies), the Netherlands (15%, 44 studies), Germany (9%, 28 studies) and Canada (8%, 25 studies). DCEs were also popular in other European countries, for example, Italy (3%, eight studies) and Sweden (2%, six studies) (not shown). We also observed an increase in studies coming from ‘other’ countries, from 0% to 34% across the four review periods, which reflects an upwards trend towards applying DCEs in middle- and low-income countries (e.g. Cameroon [239], Ghana [244], Laos [232], Malawi [254] and Vietnam [122]).

3.3.2 Attributes, Choices and Survey

In the current review period, the number of attributes per alternative in DCEs ranged from two to 21, with a median of five. We observed a slight decrease in the number of attributes; the modal category was 4–5 attributes (39%, 117 studies). In line with prior reviews, most studies (82%, 247 studies) included four to nine attributes. For the period 2013–2017, the most commonly included attribute types were monetary (50%, 150 studies), risk-related (44%, 133 studies) and time-related (39%, 117 studies). The proportions of studies including time-related and health status (24%, 71 studies) attributes decreased.

Most DCEs in the current period included nine to 16 choices per individual (54%, 162 studies), with a median of 12 (minimum 1, maximum 32). Prior reviews noted an increase in online administration of DCEs. This trend continued in the current review period, with 57% of the DCEs conducted online (172 studies), whereas the proportion of DCEs using pencil and paper dropped to 23% (69 studies). Self-completion, whether online or on paper, therefore remained the main mode of survey administration.

3.3.3 Alternatives and Sample

Prior reviews did not collect data about the number of alternatives included in each DCE or whether an opt-out or status quo option was included. For the current period, most of the studies (83%, 251 studies) included two alternatives (not including any opt-out or status quo option), with 8% (23 studies) not clearly reporting the number of included alternatives (Table 2b). The majority of the studies (64%, 194 studies) did not include an opt-out or status quo option.

The prior reviews covering the period 1990–2012 did not extract data about the sample size. In the current period, the mean and median sample size were 728 and 401, respectively. Sample size ranged from a minimum of 35 [116] to a maximum of 30,600 respondents [148]. Most of the samples included patients (37%, 110 studies) or the general public (27%, 81 studies). A large number of DCEs sampled ‘other’ populations (31%, 93 studies) such as healthcare workers, healthcare students or a mixture of these.

3.4 Experimental Design

Experimental design (planning of the alternatives and choice sets) is crucial to the conduct of a DCE. The review from de Bekker-Grob et al. [7] describes DCE design in detail. For more information about the choices researchers have to make when designing the experimental part of a DCE, we also refer to a key checklist and best practice example [14, 15].

3.4.1 Design Type, Design Plan and Blocking

As in prior review periods, most DCEs made use of a fractional design (89%, 269 studies) (Table 3). For the current review period, the most frequently reported design plan was main effects only (29%, 86 studies). This is a decrease compared with the periods 1990–2000, 2001–2008 and 2009–2012 (74%, 89% and 55%, respectively). The percentage of DCEs not clearly reporting design plan information increased to 49% (147 studies) for 2013–2017. When generating the experimental design, blocking (creating different versions of the experiment for different respondent groups) can be used to reduce respondents’ cognitive burden by reducing the total number of choices per respondent [345]. Reviews for the period 1990–2012 did not collect information about blocking. Data for the current period showed that 50% (150 studies) reported using blocking when generating the experimental design. On average, studies with blocking had 709 participants, each of whom completed 11 choice sets, whereas studies with unblocked designs had 439 participants, each of whom completed 13 choice sets.
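A minimal Python sketch of blocking, assuming a hypothetical design of 24 choice sets split into two blocks, so that each respondent answers 12 choice sets instead of 24. The random split is a simplification. Dedicated design software may instead choose the allocation so that attribute levels stay balanced across blocks, which this sketch does not attempt. The function names are illustrative, not from any package.

```python
import random


def block_design(choice_sets, n_blocks, seed=None):
    """Split a list of choice sets into n_blocks equally sized blocks at random."""
    if len(choice_sets) % n_blocks != 0:
        raise ValueError("number of choice sets must be divisible by n_blocks")
    rng = random.Random(seed)
    shuffled = list(choice_sets)
    rng.shuffle(shuffled)
    size = len(shuffled) // n_blocks
    return [shuffled[i * size:(i + 1) * size] for i in range(n_blocks)]


def assign_respondents(respondent_ids, n_blocks):
    """Rotate respondents across blocks so each block gets a similar number."""
    return {rid: i % n_blocks for i, rid in enumerate(respondent_ids)}


# Hypothetical design: 24 choice sets, two blocks of 12, 100 respondents.
design = [f"cs{k:02d}" for k in range(1, 25)]
blocks = block_design(design, n_blocks=2, seed=1)
allocation = assign_respondents(range(100), n_blocks=2)
print([len(b) for b in blocks])  # [12, 12]
```

Because each block is answered by only about half of the sample, the number of responses per choice set halves for a given sample size, which is the sample-size cost of blocking discussed later in the paper.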

3.4.2 Design Software

Ngene became the most popular software tool in the current period for generating experimental designs (21%, 62 studies, e.g. [53, 63, 139, 268, 319]). SAS (18%, 54 studies, e.g. [262, 290, 296, 300, 316]) and Sawtooth (16%, 47 studies, e.g. [46, 141, 207, 276, 323]) remained popular tools. Compared to prior review periods, we observed an increase in the percentage of studies not clearly indicating what software was used to generate the experimental design (33%, 99 studies, e.g. [44, 144, 177, 204, 299]).

3.4.3 Methods to Create Choice Sets

The upwards trend in the use of D-efficient (35%, 105 studies) experimental designs continued in the current review period. Correspondingly, fewer DCEs used orthogonal arrays through methods such as single profiles, random pairing or the foldover technique (Table 3). As with the experimental design characteristics mentioned in the previous sections, we observed that an increasing number of studies (33%, 100 studies in 2013–2017) did not clearly report the methods used to create choice sets.
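To make ‘D-efficient’ concrete: for a multinomial logit model, the D-error of a design is the determinant of the inverse Fisher information matrix raised to the power 1/K (K parameters). D-efficient designs are those with a low D-error given prior parameter values. The pure-Python sketch below evaluates two hypothetical two-attribute designs at zero priors. It is not a design search algorithm like those used by Ngene or SAS, and a Bayesian design would additionally average the D-error over draws from a prior distribution.

```python
import math


def mnl_information(design, beta):
    """Fisher information of the MNL model for a design.

    design: list of choice sets, each a list of attribute vectors (one per
    alternative). I(beta) = sum_s sum_j p_sj (x_sj - xbar_s)(x_sj - xbar_s)^T
    """
    k = len(beta)
    info = [[0.0] * k for _ in range(k)]
    for choice_set in design:
        utils = [sum(b * x for b, x in zip(beta, alt)) for alt in choice_set]
        m = max(utils)
        exps = [math.exp(u - m) for u in utils]
        total = sum(exps)
        probs = [e / total for e in exps]
        xbar = [sum(p * alt[a] for p, alt in zip(probs, choice_set)) for a in range(k)]
        for p, alt in zip(probs, choice_set):
            d = [alt[a] - xbar[a] for a in range(k)]
            for r in range(k):
                for c in range(k):
                    info[r][c] += p * d[r] * d[c]
    return info


def determinant(matrix):
    """Determinant via Gaussian elimination with partial pivoting."""
    a = [row[:] for row in matrix]
    n = len(a)
    det = 1.0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        if abs(a[pivot][col]) < 1e-12:
            return 0.0
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def d_error(design, beta):
    """det(I(beta)^-1)^(1/K); lower values indicate a more efficient design."""
    det_info = determinant(mnl_information(design, beta))
    if det_info <= 0:
        return math.inf  # parameters are not identifiable from this design
    return det_info ** (-1.0 / len(beta))


zero_priors = [0.0, 0.0]
design_a = [[(1, 0), (0, 1)], [(1, 1), (0, 0)]]  # attribute differences vary independently
design_b = [[(1, 1), (0, 0)], [(1, 0), (0, 0)]]  # attribute differences partly confounded
print(round(d_error(design_a, zero_priors), 6))  # 2.0
print(round(d_error(design_b, zero_priors), 6))  # 4.0
```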

3.5 Econometric Analysis Methods

Information about the different econometric analysis methods and the appropriateness of these methods for different DCE applications is described in great detail in the prior reviews [6, 7, 11]. More information can be found in papers by Louviere and Lancsar [12], Bridges et al. [14] and Hauber et al. [17]. Table 4 parts (a) and (b) summarise information about econometric analyses from the current and prior review periods.

3.5.1 Econometric Analysis Model, Software and Preference Heterogeneity

We present information about econometric analysis models according to the taxonomy described in the “Methods” section and visualised in Fig. 1. Reviews for the periods 1990–2000 and 2001–2008 reported that random-effects (random-intercept) probit models were the most commonly used models for analysing preference data (53% and 41% of DCEs, respectively). The review for the period 2009–2012 showed a shift to other methods such as multinomial logit models (45%) and mixed (random-parameter) logit models (25%). This trend continued in the current review period (see Table 4a). Most DCEs in 2013–2017 reported the use of mixed logit models (39%, 118 studies, e.g. [47, 271, 301, 314, 318]) or multinomial logit models (39%, 116 studies, e.g. [92, 110, 166, 294, 339]) to analyse preference data. The current review period also showed an increase in the use of latent class models (12%, 36 studies, e.g. [38, 91, 139, 165, 269]) and other econometric analysis models. Examples include generalised multinomial logit (4%, 12 studies, e.g. [97, 124, 157, 174, 240]) and heteroskedastic multinomial logit (4%, 11 studies, e.g. [134, 139, 184, 256, 309]).
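Latent class models capture preference heterogeneity with a small number of classes, each with its own MNL coefficients. The Python sketch below uses hypothetical class shares and coefficients to compute one respondent's likelihood and posterior class membership. In applications the class shares are estimated, often as a function of covariates, rather than fixed as here.

```python
import math


def mnl_prob(beta, choice_set, chosen):
    """MNL probability that alternative `chosen` is picked from `choice_set`."""
    utils = [sum(b * x for b, x in zip(beta, alt)) for alt in choice_set]
    m = max(utils)
    exps = [math.exp(u - m) for u in utils]
    return exps[chosen] / sum(exps)


def class_sequence_likelihoods(class_betas, choices):
    """Likelihood of a respondent's whole choice sequence within each class."""
    out = []
    for beta in class_betas:
        lik = 1.0
        for choice_set, chosen in choices:
            lik *= mnl_prob(beta, choice_set, chosen)
        out.append(lik)
    return out


def latent_class_likelihood(shares, class_betas, choices):
    """Unconditional likelihood: class-specific likelihoods weighted by class shares."""
    liks = class_sequence_likelihoods(class_betas, choices)
    return sum(s * l for s, l in zip(shares, liks))


def posterior_membership(shares, class_betas, choices):
    """Bayes' rule: posterior probability that the respondent belongs to each class."""
    liks = class_sequence_likelihoods(class_betas, choices)
    weighted = [s * l for s, l in zip(shares, liks)]
    total = sum(weighted)
    return [w / total for w in weighted]


# Hypothetical two-class example with attributes (effectiveness, cost):
# class 1 trades effectiveness against cost, class 2 ignores cost.
shares = [0.5, 0.5]
class_betas = [(1.0, -1.0), (1.0, 0.0)]
task = [(1, 1), (0, 0)]           # alternative A is more effective but costs more
choices = [(task, 0), (task, 0)]  # the respondent chose A in both tasks

lik = latent_class_likelihood(shares, class_betas, choices)
post = posterior_membership(shares, class_betas, choices)
print(round(lik, 4), [round(p, 3) for p in post])
```

Repeatedly choosing the costlier, more effective option shifts the posterior towards the cost-insensitive class.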

Prior reviews did not collect data about the software used for econometric analysis. For the current review period, Table 4b shows that most DCEs made use of Stata (31%, 94 studies, e.g. [91, 110, 138, 149, 213]) or Nlogit (22%, 65 studies, e.g. [94, 171, 204, 282, 346]) to conduct econometric analysis. However, 26% (79 studies, e.g. [101, 184, 211, 231, 330]) did not clearly report information about the software used.

Among the studies that used mixed logit models to account for preference heterogeneity in the period 2013–2017, 65 (22% of all included DCEs) reported additional information about the distributional assumptions of the mixed logit analysis and the number of draws (e.g. Halton draws) used to simulate preference heterogeneity. This percentage is similar to that for the period 2009–2012 (21%). The mean number of draws for the current review period was 1354 (median 1000, minimum 50, maximum 10,000), and 18% of the DCEs (53 studies) assumed that parameters followed the normal distribution.
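Mixed logit probabilities have no closed form. They are approximated by averaging MNL probabilities over draws of the random coefficients, and Halton sequences are a common quasi-random choice for those draws. The Python sketch below assumes independent normally distributed coefficients and a panel specification in which the same draw is used for all of a respondent's choices. The prime bases, attribute values and parameter values are hypothetical. Practical implementations often discard initial Halton elements or use scrambled sequences, which this sketch omits.

```python
import math
from statistics import NormalDist

PRIMES = [2, 3, 5, 7, 11, 13]  # one Halton base per random coefficient


def halton(index, base):
    """Element `index` (>= 1) of the one-dimensional Halton sequence in `base`."""
    result, fraction = 0.0, 1.0
    while index > 0:
        fraction /= base
        result += fraction * (index % base)
        index //= base
    return result


def mnl_prob(beta, choice_set, chosen):
    """MNL probability that alternative `chosen` is picked from `choice_set`."""
    utils = [sum(b * x for b, x in zip(beta, alt)) for alt in choice_set]
    m = max(utils)
    exps = [math.exp(u - m) for u in utils]
    return exps[chosen] / sum(exps)


def simulated_probability(means, sds, choices, n_draws=1000):
    """Simulated probability of one respondent's choice sequence under a mixed
    logit with independent coefficients beta_k ~ N(mean_k, sd_k^2)."""
    if len(means) > len(PRIMES):
        raise ValueError("add more prime bases for additional coefficients")
    std_normal = NormalDist()
    total = 0.0
    for r in range(1, n_draws + 1):
        # Map each Halton point in (0, 1) to a normal draw via the inverse CDF.
        beta = [m + s * std_normal.inv_cdf(halton(r, PRIMES[k]))
                for k, (m, s) in enumerate(zip(means, sds))]
        seq = 1.0  # same beta for every choice of this respondent (panel)
        for choice_set, chosen in choices:
            seq *= mnl_prob(beta, choice_set, chosen)
        total += seq
    return total / n_draws


# Hypothetical attributes (effectiveness, cost); cost coefficient kept fixed.
task = [(1, 1), (0, 0)]
choices = [(task, 0), (task, 0), (task, 1)]
p = simulated_probability(means=[1.0, -0.5], sds=[0.8, 0.0], choices=choices)
print(round(p, 4))
```

Setting every standard deviation to zero collapses the model to a standard MNL, which provides a simple check of the simulation code.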

3.6 Validity Checks and Qualitative Methods

DCEs are based on responses to hypothetical choices (stated preferences), so internal and external validity checks provide a crucial opportunity to assess data quality or to compare stated preferences from DCEs with revealed preferences. As Clark et al. [6] observed in their review, there is often little reported about the tests for external validity, possibly because validating hypothetical choice scenarios is difficult [347]. Perhaps for this reason, the review covering the period 1990–2000 did not extract specific information about external validity tests. In the reviews from 2001–2012, only a very small proportion (1%) of the DCEs reported any details about their investigations into external validity. The current review period showed that 2% (seven studies [55, 93, 147, 184, 185, 195, 248]) reported using external validity tests (Table 5).

For detailed information about the different internal validity tests, we refer to the prior review papers [6, 7, 11]. In the current review period, the percentage of studies that included internal validity checks ranged from a maximum of 17% (50 studies) for non-satiation checks to 6% (18 studies) for internal compensatory checks. Internal compensatory checks were reported less frequently than in earlier review periods. For the current review period, ‘other’ validity checks such as tests for theoretical and face validity and consistency were used frequently (34%, 102 studies).

Another way to enhance quality in a DCE is to complement the quantitative study with qualitative methods [35]. For the current review period, 86% (258) of the DCEs used qualitative methods to enhance the process and/or results. Most DCEs used interviews (50%, 151 studies) or focus group techniques (18%, 54 studies). Qualitative methods were usually used to inform attribute (53%, 160 studies) and/or level (44%, 134 studies) selection, which follows the overall upwards trend reported in prior reviews. The proportion of DCEs using qualitative methods for questionnaire pre-testing (38%, 113 studies) was similar to the level in the previous review period. Overall, just as in the previous review periods, few studies in the current review period (4%, 12 studies) used qualitative methods to improve the understanding of results/responses.

3.7 Outcome Measures

Trends in the outcome measures presented by DCEs are summarised in Table 6.

As mentioned in prior reviews, DCEs often presented their outcomes in terms of willingness to pay (WTP), a monetary welfare measure or a utility score [6, 7, 11]. Use of these measures declined over the two preceding review periods (2001–2012), and use of utility scores decreased from 24% to 8% over the three preceding periods (1990–2012). Relative to the previous period, we observed increases in the use of utility scores (17%, 50 studies, e.g. [61, 128, 141, 164, 317]), odds ratios (10%, 30 studies, e.g. [80, 146, 200, 234, 280]) and probability scores (13%, 38 studies, e.g. [122, 154, 198, 272, 277]). We also collected information about willingness-to-accept (WTA) measures (4%, 13 studies, e.g. [53, 94, 250, 322, 338]) and regression coefficients (56%, 169 studies, e.g. [44, 57, 231, 244, 276]), which were not collected in previous reviews. The proportion of studies with ‘other’ outcome measures remained near one half (49%, 147 studies, e.g. [48, 87, 114, 207, 273]). Examples from this category include (predicted) choice shares, maximum acceptable risk, relative importance and ranking.
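Two of the outcome measures above follow directly from the estimated coefficients of a linear-in-attributes MNL. Marginal WTP for attribute k is the negative ratio of its coefficient to the cost coefficient, and exponentiating a coefficient gives an odds ratio. The Python sketch below uses hypothetical coefficients, not estimates from any reviewed study.

```python
import math


def willingness_to_pay(coefficients, cost_attribute):
    """Marginal WTP for each non-cost attribute: -beta_k / beta_cost."""
    beta_cost = coefficients[cost_attribute]
    if beta_cost == 0:
        raise ValueError("cost coefficient must be non-zero")
    return {name: -b / beta_cost
            for name, b in coefficients.items() if name != cost_attribute}


def odds_ratios(coefficients):
    """Odds ratio for a one-unit increase in each attribute: exp(beta_k)."""
    return {name: math.exp(b) for name, b in coefficients.items()}


# Hypothetical coefficients: cost per currency unit, waiting time in weeks.
coefs = {"effectiveness": 0.75, "waiting_time": -0.5, "cost": -0.25}
wtp = willingness_to_pay(coefs, "cost")
print(wtp)  # {'effectiveness': 3.0, 'waiting_time': -2.0}
print(odds_ratios(coefs))
```

The WTP ratio assumes that cost enters utility linearly. When the cost coefficient is itself random (e.g. in a mixed logit), the distribution of this ratio needs separate treatment.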

4 Discussion

In this study, we reviewed DCEs published between 2013 and 2017. We followed the methods of prior reviews and compared our extraction results to those reviews to identify trends. We identified that DCEs have continued to increase in number and have been undertaken in more and more countries. Studies reported using more sophisticated designs with associated software, for example, D-efficient designs generated using Ngene. The trend towards the use of more sophisticated econometric models has also continued. However, many studies presented sophisticated methods with insufficient detail. For example, we were not able to check whether the results had the correct interpretation or whether the authors had conducted the appropriate diagnostics (e.g. checked that the data possessed the IIA characteristic). Qualitative methods have continued to be popular as an approach to select attributes and levels, which might improve validity. In this study, we also extracted data in several new categories, for example, sample size and type, the use of blocking, software used for econometric analysis and type of qualitative method used. We observed that the mean and median sample size were 728 and 401, respectively, with patients the most commonly sampled population. We also observed that half of the studies used blocking and that Stata was the software most often used for econometric analysis. Interviewing was the most popular qualitative research method used alongside DCEs.

The observed increase in the total number of DCEs in health economics was similar to the trend reported in prior reviews [6, 7, 11], but less consistent from year to year (Fig. 3). This less consistent increase might be explained by the presence of many competing stated preference methods [4, 5, 347]. We hypothesise that other methods may be increasing in popularity or becoming more useful in health settings [348]. Examples of such methods may include BWS case 1 and case 2 [349,350,351], which were not included in this review. Additionally, in this review, we excluded a substantial number of studies (n = 31) that addressed methodological questions about DCEs rather than conducting empirical research. The presence of such studies may indicate that knowledge about DCEs in health has increased and that more research effort is being directed at developing the method. Examples include simulation studies about experimental design, studies comparing the outcomes of a DCE to other stated preference method outcomes and studies examining different model specifications [352,353,354]. This might be another explanation for the less consistent increase in DCE application studies.

The common use of fractional designs, as described in prior reviews [6, 7], has continued. This review also found that main effects DCEs continue to dominate; however, there is a downwards trend as DCE designs incorporate two-way interactions more often. This is in line with the recommendations of Louviere and Lancsar [12], who suggest that the inclusion of interaction terms should be explored at the experimental design stage. Ngene became the most popular software tool in the current review period for generating experimental designs, while D-efficient designs became the most popular method to create choice sets. Perhaps as a consequence of the rise in software-generated designs, this review also showed that an increasing percentage of articles did not include information about experimental design features such as the design plan. Omitting this type of information might inhibit quality assessment and reduce confidence in the results. Future research might focus on the specific reasons why such information is missing and the impact of the missing information on quality assessment of DCEs. One potential reason for omitting methodological details is the journal word limit. When confronted with a low word limit, authors should consider using online supplementary material to report additional design and analysis details.

In addition to these observations about the generation of experimental designs, we identified design information that would be helpful to report in DCEs and future systematic reviews. For example, prior reviews did not include information about blocking, and although at least half of the DCEs we reviewed used blocking, 30% of the studies we reviewed did not include information about blocking. Blocking could be an important technique in light of the growing literature about the cognitive burden of DCEs and the impact of this cognitive burden on respondent outcomes [345]. However, blocking also has the disadvantage of requiring a larger sample size [345]. The approach described by Sándor and Wedel [355] might be another alternative to increase the validity of DCE outcomes in case of relatively small sample sizes or the investigation of preference heterogeneity.

Prior reviews identified a shift to more flexible econometric analysis models [6, 7], which is not necessarily positive. This trend has continued in this review, with most studies using multinomial logit or mixed logit models. Although we did not formally extract information about variance estimation, we noted that among the DCEs using multinomial logit models to analyse choice data, few reported robust (Huber-White) standard errors; most reported conventional standard errors. Because robust standard errors remain valid under certain forms of model misspecification (e.g. heteroskedasticity and, when clustered by respondent, correlation between a respondent’s repeated choices), it is common in economics and econometrics to report them instead of conventional standard errors [356]. In particular, when repeated observations come from the same individuals, conventional standard errors tend to be biased downward [357]. Thus, future DCEs in health economics could benefit from more appropriate treatment of clustered data (i.e. use of cluster-robust standard errors) and more complete reporting of econometric output.
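The difference between conventional and respondent-clustered (cluster-robust) standard errors can be seen in a small example. The Python sketch below fits a one-coefficient logit for two-alternative choices, where dx is the attribute difference between alternatives A and B and y = 1 if A was chosen. It uses hypothetical data for three respondents and then computes both standard errors. It omits the finite-sample adjustment that statistical packages commonly apply, and with only three clusters a robust estimate would be unreliable in practice. The data were chosen so that one respondent's choices deviate systematically from the model, which inflates the robust standard error.

```python
import math


def logistic(z):
    return 1.0 / (1.0 + math.exp(-z))


def fit_binary_logit(panel, tol=1e-12, max_iter=100):
    """Newton-Raphson MLE for P(choose A) = logistic(beta * dx), one coefficient."""
    beta = 0.0
    for _ in range(max_iter):
        score, information = 0.0, 0.0
        for obs in panel.values():
            for dx, y in obs:
                p = logistic(beta * dx)
                score += (y - p) * dx
                information += p * (1 - p) * dx * dx
        step = score / information
        beta += step
        if abs(step) < tol:
            break
    return beta


def standard_errors(panel, beta):
    """Conventional and cluster-robust (clustered by respondent) standard errors."""
    information, meat = 0.0, 0.0
    for obs in panel.values():
        cluster_score = 0.0
        for dx, y in obs:
            p = logistic(beta * dx)
            information += p * (1 - p) * dx * dx
            cluster_score += (y - p) * dx
        meat += cluster_score ** 2  # sum of squared respondent-level scores
    naive = math.sqrt(1.0 / information)
    robust = math.sqrt(meat) / information  # sqrt(H^-1 B H^-1) for a scalar
    return naive, robust


# Hypothetical panel: respondent -> list of (dx, y) for repeated choice tasks.
panel = {
    "r1": [(1, 1), (1, 1), (-1, 0), (1, 1)],
    "r2": [(1, 0), (1, 0), (-1, 1), (1, 1)],
    "r3": [(1, 1), (-1, 0), (-1, 0), (1, 1)],
}
beta_hat = fit_binary_logit(panel)
naive_se, robust_se = standard_errors(panel, beta_hat)
print(round(beta_hat, 4), round(naive_se, 4), round(robust_se, 4))
```

For these data the estimate is ln 3, the conventional standard error is 2/3 and the cluster-robust one is √6/2.25 ≈ 1.09.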

In terms of analytical methods, we also observed some patterns in the exploration of preference and scale heterogeneity. Among the 39% of studies that used a mixed logit model, many treated heterogeneity as a nuisance; that is, they used the mixed model to accommodate repeated measures but did not report additional information about the ‘mixed’ aspect of the data (e.g. standard deviation estimates). Since preference heterogeneity is regarded as an important aspect of choice modelling, taking full advantage of the modelling results might help us understand it better [358]. With regard to scale heterogeneity, work by Fiebig et al. [346] indicated that other models, such as the generalised multinomial logit and heteroskedastic multinomial logit models, could be considered when analysing DCE data to identify differences in scale when comparing preferences between groups of respondents [359]. Data from this review identified a small number of DCEs using such methods; for a more detailed breakdown, we refer readers to another review focussing specifically on scale heterogeneity [30]. However, the generalised multinomial logit model should be used with caution, since its ability to capture scale heterogeneity has been questioned in the literature [360].
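Why scale matters when comparing groups can be shown in a few lines. In a logit model only the product of the scale parameter and the preference weights is identified, so two groups with identical preferences but different error variances produce coefficients of different magnitude, while ratios of coefficients are unaffected. The Python sketch below uses hypothetical attributes, coefficients and scale values; it illustrates the confound that the generalised and heteroskedastic multinomial logit models are designed to address, but it does not implement either model.

```python
import numpy as np


def mnl_probabilities(X, beta, scale=1.0):
    """Logit choice probabilities for one choice set with scale parameter `scale`.

    A larger scale means a smaller error variance; the data identify only
    scale * beta, not scale and beta separately.
    """
    v = scale * (X @ beta)
    e = np.exp(v - v.max())
    return e / e.sum()


# Hypothetical attributes: benefit (0/1), risk (0/1), cost; three alternatives
X = np.array([[1.0, 0.0, 50.0],
              [0.0, 1.0, 10.0],
              [0.0, 0.0, 0.0]])
beta = np.array([0.8, -0.4, -0.02])   # identical underlying preferences in both groups

scale_a, scale_b = 1.0, 0.5           # group B makes noisier choices
coef_a, coef_b = scale_a * beta, scale_b * beta   # what an MNL would estimate per group
p_a = mnl_probabilities(X, beta, scale_a)
p_b = mnl_probabilities(X, beta, scale_b)

# Raw coefficients differ by the scale ratio, but ratios of coefficients
# (here marginal willingness to pay, -beta_k / beta_cost) do not.
wtp_a = -coef_a[:2] / coef_a[2]
wtp_b = -coef_b[:2] / coef_b[2]
print(coef_a, coef_b)
print(wtp_a, wtp_b)
```

Comparing `coef_a` with `coef_b` directly would suggest that the groups value the attributes differently, although only the error variance differs; the scale-free ratios agree, and group B's choice probabilities are simply flatter.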

Articles by Vass and Payne [19] and Mott [20] describe issues influencing the degree to which DCE findings are used in healthcare decision-making (e.g. health-state valuation and health technology assessment). These articles, the rising popularity of the method and interest from regulators and funders suggest that DCEs could play an important role in real-world decision-making [361, 362]. However, concerns have been expressed about the validity, reliability, robustness and generalisability of DCEs [11, 363]. A key step in understanding the robustness of DCEs is establishing whether stated preferences reflect ‘true’ preferences as revealed in the market [10]. In this study, we observed that the number of studies testing external validity remained small. Future research should focus on identifying and resolving the methodological and practical challenges involved in validity testing, and on guiding the incorporation of DCEs into actual healthcare decision-making. Another practice that may improve the robustness of DCEs and facilitate their use in healthcare decision-making is the increased use of qualitative methods to complement quantitative DCE analysis [363]. Prior reviews and additional literature suggest that qualitative research methods can strengthen DCEs and other quantitative methods by supporting several investigations, such as (1) identification of relevant attributes and levels, (2) verification that respondents understand the presented information, and (3) learning about respondents’ decision strategies [6, 7, 11, 364]. These investigations can help determine whether respondents are making choices in line with the underpinning utility theories, thereby supporting the legitimacy of the underlying assumptions. This review showed an overall upwards trend in the number of DCEs using qualitative methods to select attributes and levels. This move towards a more mixed-methods approach has also been observed by others, for example, by Ikenwilo et al. [365].

4.1 Strengths and Limitations

The current study has several strengths. First, the detailed data extraction was completed by each author individually, with the articles divided approximately equally among authors because of the relatively short timeframe and the need to balance author burden with study quality. Additionally, a subsample of studies (20%) was double-checked by one author (V.S.) for quality control, which enhanced reliability. Second, this study identified trends in empirical DCEs by comparing outcomes from all prior reviews. Additionally, this study included aspects of empirical DCEs not investigated before, although these aspects have been recognised in the literature as increasingly important in DCE research (e.g. blocking in experimental design and the type of qualitative methods used in a DCE). Third, our observation of less rapid growth in the number of empirical DCEs (compared with the growth observed in previous reviews) matches the trend in preference research towards focusing on the broad range of stated preference methods available (rather than on DCEs exclusively) [4, 5, 347].

A potential weakness of this study was the use of multiple reviewers with potentially different interpretations of DCE reports, which might have affected the data extraction and, as a consequence, the results presented. To limit inconsistency between reviewers, all co-authors discussed the data extraction frequently, and results were cross-validated by a single author (V.S.). Similarly, inconsistency in interpretation may also have occurred between the different review periods. Procedural information from the two most recent reviews was used to ensure consistency, and we are therefore confident that the general trends reported, and the conclusion that more detailed methods reporting is needed, hold. Another potential weakness is the use of only one database (PubMed). However, like the authors of the prior reviews [6, 7], we do not expect the review findings to differ significantly when searching other databases. Also, since we were interested in identifying trends and therefore in maximising comparability between the different reviews, we preferred to restrict our searches to this single database. As with many systematic reviews, data were extracted from published manuscripts and online appendices. The results therefore rely on what was reported in the final article and do not necessarily reflect all activities of the authors. The trends presented could therefore reflect factors such as publication bias, journal scope, editor preferences and word limits rather than actual practice. Additionally, although we updated the data extraction tool based on changes in the field, future research might benefit from updating other aspects of the systematic review protocol, such as search terms and inclusion and exclusion criteria (e.g. inclusion of best–worst scaling). Finally, although we believe that DCEs are both useful and common enough to deserve focused attention in this review, DCEs represent one method among many for examining health preferences, and other methods may be preferable depending on the circumstances [4].

5 Conclusion

This study provides an overview of the applications and methods of health-related DCEs. The use of empirical DCEs in health economics has continued to grow, as have the areas of application and the geographic scope. This study identified changes in experimental design (e.g. more frequent use of D-efficient designs), analysis methods (e.g. mixed logit models most frequently used), validity enhancement (e.g. more diverse use of internal validity checks), qualitative methods (e.g. an upwards trend in the use of qualitative methods for attribute and level selection) and outcome measures (e.g. coefficients most frequently used). However, a large number of studies did not report methodological details. DCEs should include more complete information, for example, about design generation, blocking, model specification, random-parameter estimation and model results. Developing reporting guidelines specifically for DCEs might improve quality assessment, increase confidence in the results and improve the ability of decision-makers to act on them. How and when to integrate health-related DCE outcomes into decision-making remains an important area for future research.

Notes

In this review, best–worst scaling (BWS) cases 1 and 2 are distinguished from case 3. Since case 1 and case 2 BWS do not involve attribute-based comparisons between two or more alternatives, they were excluded from this review [42], consistent with the previous review [6]. Case 3 BWS, however, involves an attribute-based comparison between two or more alternatives and is considered an extension of DCEs in the literature [42, 367]. Therefore, case 3 BWS applications were included in this review.

References

Hogg CN. Patient and public involvement: what next for the NHS? Health Expect. 2007;10(2):129–38.

Mockford C, Staniszewska S, Griffiths F, Herron-Marx S. The impact of patient and public involvement on UK NHS health care: a systematic review. Int J Qual Health Care. 2012;24(1):28–38. https://doi.org/10.1093/intqhc/mzr066.

Soekhai V, Whichello C, Levitan B, Veldwijk J, Hammad T, Kihlbom U, et al. Compendium of methods for measuring patient preferences in medical treatment. Value Health. 2017;20(9):A684–5.

MDIC. Medical Device Innovation Consortium (MDIC): patient centered risk-benefit project report. 2015. http://mdic.org/wpcontent/uploads/2015/05/MDIC_PCBR_Framework_Web1.pdf. Accessed 29 June 2018.

Mahieu PA, Andersson H, Beaumais O, dit Sourd RC, Hess S, Wolff F. Stated preferences: a unique database composed of 1,657 recent published articles in journals related to agriculture, environment or health. Rev Agric Food Environ Stud. 2017;98(3):201–20.

Clark MD, Determann D, Petrou S, Moro D, de Bekker-Grob EW. Discrete choice experiments in health economics: a review of the literature. Pharmacoeconomics. 2014;32:883–902. http://www.ncbi.nlm.nih.gov/pubmed/25005924.

De Bekker-Grob EW, Ryan M, Gerard K. Discrete choice experiments in health economics: a review of the literature. Health Econ. 2012;21:145–72. http://doi.wiley.com/10.1002/hec.1697.

Lancaster KJ. A new approach to consumer theory. J Polit Econ. 1966;74:132–57. http://www.journals.uchicago.edu/doi/10.1086/259131.

Mandeville KL, Lagarde M, Hanson K. The use of discrete choice experiments to inform health workforce policy: a systematic review. BMC Health Serv Res. 2014;14:367. https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4161911/pdf/12913_2014_Article_3466.pdf.

Thurstone LL. A law of comparative judgment. Psychol Rev. 1927;34:273–86. http://content.apa.org/journals/rev/34/4/273.

Ryan M, Gerard K. Using discrete choice experiments to value health care programmes: current practice and future research reflections. Appl Health Econ Health Policy. 2003;2(1):55–64.

Louviere JJ, Lancsar E. Choice experiments in health: the good, the bad, the ugly and toward a brighter future. Health Econ Policy Law. 2009;4:527–46. http://www.ncbi.nlm.nih.gov/pubmed/19715635.

Lancsar E, Swait J. Reconceptualising the external validity of discrete choice experiments. Pharmacoeconomics. 2014;32:951–65. http://link.springer.com/10.1007/s40273-014-0181-7.

Bridges JFP, Hauber AB, Marshall D, Lloyd A, Prosser LA, Regier DA, et al. Conjoint analysis applications in health—a checklist: a report of the ISPOR Good Research Practices for Conjoint Analysis Task Force. Value Health. 2011;14:403–13. https://www.ispor.org/TaskForces/documents/ISPOR-CA-in-Health-TF-Report-Checklist.pdf.

Johnson FR, Lancsar E, Marshall D, Kilambi V, Mühlbacher A, Regier DA, et al. Constructing experimental designs for discrete-choice experiments: report of the ISPOR Conjoint Analysis Experimental Design Good Research Practices Task Force. Value Health. 2013;16:3–13. https://www.ispor.org/ValueInHealth/ShowValueInHealth.aspx?issue=3551E3F3-83A8-4895-9415-5272AF29A9DF.

Lancsar E, Louviere J. Conducting discrete choice experiments to inform health care decision-making: a user’s guide. Pharmacoeconomics. 2008;26:661–77. https://link.springer.com/content/pdf/10.2165%2F00019053-200826080-00004.pdf.

Hauber AB, González JM, Groothuis-Oudshoorn CGM, Prior T, Marshall DA, Cunningham C, et al. Statistical methods for the analysis of discrete choice experiments: a report of the ISPOR Conjoint Analysis Good Research Practices Task Force. Value Health. 2016;19:300–15. https://www.ispor.org/Conjoint-Analysis-Statistical-Methods-Guidelines.pdf.

Louviere JJ, Islam T, Wasi N, Street D, Burgess L. Designing discrete choice experiments: do optimal designs come at a price? J Consum Res. 2008;35:360–75. https://academic.oup.com/jcr/article-lookup/doi/10.1086/586913.

Vass CM, Payne K. Using discrete choice experiments to inform the benefit-risk assessment of medicines: are we ready yet? Pharmacoeconomics. 2017;35:859–66. http://www.ncbi.nlm.nih.gov/pubmed/28536955.

Mott DJ. Incorporating quantitative patient preference data into healthcare decision-making processes: is HTA falling behind? Patient. 2018;11:249–52. http://link.springer.com/10.1007/s40271-018-0305-9.

Mulhern B, Norman R, Street D, Viney R. One method, many methodological choices: a structured review of discrete-choice experiments for health state valuation. PharmacoEconomics. 2018:1–15.

Quaife M, Terris-Prestholt F, Di Tanna GL, Vickerman P. How well do discrete choice experiments predict health choices? A systematic review and meta-analysis of external validity. Eur J Health Econ. 2018:1–14.

Marshall D, Bridges JF, Hauber B, Cameron R, Donnalley L, Fyie K, et al. Conjoint analysis applications in health—how are studies being designed and reported? Patient Patient-Cent Outcomes Res. 2010;3(4):249–56.

Kleij KS, Tangermann U, Amelung VE, Krauth C. Patients’ preferences for primary health care–a systematic literature review of discrete choice experiments. BMC Health Serv Res. 2017;17(1):476.

Clark MD, Szczepura A, Gumber A, Howard K, Moro D, Morton RL. Measuring trade-offs in nephrology: a systematic review of discrete choice experiments and conjoint analysis studies. Nephrol Dial Transpl. 2017;33(2):348–55.

Michaels-Igbokwe C, MacDonald S, Currie GR. Individual preferences for child and adolescent vaccine attributes: a systematic review of the stated preference literature. Patient Patient-Cent Outcomes Res. 2017;10(6):687–700.

Regmi K, Kaphle D, Timilsina S, Tuha NAA. Application of discrete-choice experiment methods in tobacco control: a systematic review. PharmacoEconomics-Open. 2018:1–13.

Whitty JA, Gonçalves ASO. A systematic review comparing the acceptability, validity and concordance of discrete choice experiments and best–worst scaling for eliciting preferences in healthcare. Patient Patient-Cent Outcomes Res. 2017:1–17.

Harrison M, Milbers K, Hudson M, Bansback N. Do patients and health care providers have discordant preferences about which aspects of treatments matter most? Evidence from a systematic review of discrete choice experiments. BMJ Open. 2017;7(5):e014719.

Wright SJ, Vass CM, Sim G, Burton M, Fiebig DG, Payne K. Accounting for scale heterogeneity in healthcare-related discrete choice experiments when comparing stated preferences: a systematic review. Patient Patient-Cent Outcomes Res. 2018:1–14.

Bien DR, Danner M, Vennedey V, Civello D, Evers SM, Hiligsmann M. Patients’ preferences for outcome, process and cost attributes in cancer treatment: a systematic review of discrete choice experiments. Patient Patient-Cent Outcomes Res. 2017;10(5):553–65.

Vass C, Gray E, Payne K. Discrete choice experiments of pharmacy services: a systematic review. Int J Clin Pharm. 2016;38(3):620–30.

Mansfield C, Tangka FK, Ekwueme DU, Smith JL, Guy GP Jr, Li C, et al. Stated preference for cancer screening: a systematic review of the literature, 1990–2013. Prev Chronic Dis. 2016;13.

Schatz NK, Fabiano GA, Cunningham CE, Waschbusch DA, Jerome S, Lupas K, Morris KL. Systematic review of patients’ and parents’ preferences for ADHD treatment options and processes of care. Patient Patient-Cent Outcomes Res. 2015;8(6):483–97.

Vass C, Rigby D, Payne K. The role of qualitative research methods in discrete choice experiments: a systematic review and survey of authors. Med Decis Mak. 2017;37(3):298–313.

Mandeville KL, Lagarde M, Hanson K. The use of discrete choice experiments to inform health workforce policy: a systematic review. BMC Health Serv Res. 2014;14(1):367.

Hiligsmann M, Bours SP, Boonen A. A review of patient preferences for osteoporosis drug treatment. Curr Rheumatol Rep. 2015;17(9):61.

Webb EJ, Meads D, Eskyte I, King N, Dracup N, Chataway J, et al. A systematic review of discrete-choice experiments and conjoint analysis studies in people with multiple sclerosis. Patient Patient-Cent Outcomes Res. 2018:1–12.

Janssen EM, Marshall DA, Hauber AB, Bridges JFP. Improving the quality of discrete-choice experiments in health: how can we assess validity and reliability? Expert Rev Pharmacoecon Outcomes Res. 2017;17:531–42. http://www.ncbi.nlm.nih.gov/pubmed/29058478.

Zhou M, Thayer WM, Bridges JF. Using latent class analysis to model preference heterogeneity in health: a systematic review. PharmacoEconomics. 2018.

Mulhern B, Norman R, Street D, Viney R. One method, many methodological choices: a structured review of discrete-choice experiments for health state valuation. PharmacoEconomics. 2018:1–15.

Flynn TN, Louviere JJ, Peters TJ, Coast J. Best-worst scaling: what it can do for health care research and how to do it. J Health Econ. 2007;26:171–89. http://www.ncbi.nlm.nih.gov/pubmed/16707175.

Augustovski F, Beratarrechea A, Irazola V, Rubinstein F, Tesolin P, Gonzalez J, et al. Patient preferences for biologic agents in rheumatoid arthritis: a discrete-choice experiment. Value Health. 2013;16:385–93.

Boone D, Mallett S, Zhu S, Yao GL, Bell N, Ghanouni A, et al. Patients’ & healthcare professionals’ values regarding true- & false-positive diagnosis when colorectal cancer screening by CT colonography: discrete choice experiment. PLoS One. 2013;8.

Carroll FE, Al-Janabi H, Flynn T, Montgomery AA. Women and their partners’ preferences for Down’s syndrome screening tests: a discrete choice experiment. Prenat Diagn. 2013;33:449–56.

Deal K, Marshall D, Dabrowski D, Charter A, Bukoski M, Moayyedi P. Assessing the value of symptom relief for patients with gastroesophageal reflux disease treatment: willingness to pay using a discrete choice experiment. Value Health. 2013;16:588–98. https://doi.org/10.1016/j.jval.2013.01.007.

De Bekker-Grob EW, Bliemer MCJ, Donkers B, Essink-Bot ML, Korfage IJ, Roobol MJ, et al. Patients’ and urologists’ preferences for prostate cancer treatment: a discrete choice experiment. Br J Cancer. 2013;109:633–40. https://doi.org/10.1038/bjc.2013.370.

Gelhorn HL, Stringer SM, Brooks A, Thompson C, Monz BU, Boye KS, et al. Preferences for medication attributes among patients with type 2 diabetes mellitus in the UK. Diabetes Obes Metab. 2013;15:802–9.

Glenngård AH, Hjelmgren J, Thomsen PH, Tvedten T. Patient preferences and willingness-to-pay for ADHD treatment with stimulants using discrete choice experiment (DCE) in Sweden, Denmark and Norway. Nord J Psychiatry. 2013;67:351–9.

Gonzalez JM, Johnson FR, Runken MC, Poulos CM. Evaluating migraineurs’ preferences for migraine treatment outcomes using a choice experiment. Headache. 2013;53:1635–50.

Hauber AB, Arden NK, Mohamed AF, Johnson FR, Peloso PM, Watson DJ, et al. A discrete-choice experiment of United Kingdom patients’ willingness to risk adverse events for improved function and pain control in osteoarthritis. Osteoarthritis Cartilage. 2013;21:289–97. https://doi.org/10.1016/j.joca.2012.11.007.

Krucien N, Gafni A, Fleury B, Pelletier-Fleury N. Patients’ with obstructive sleep apnoea syndrome (OSAS) preferences and demand for treatment: a discrete choice experiment. Thorax. 2013;68:487–8.

Laba TL, Brien JA, Fransen M, Jan S. Patient preferences for adherence to treatment for osteoarthritis: the MEdication Decisions in Osteoarthritis Study (MEDOS). BMC Musculoskelet Disord. 2013;14.

Lathia N, Isogai PK, Walker SE, De Angelis C, Cheung MC, Hoch JS, et al. Eliciting patients’ preferences for outpatient treatment of febrile neutropenia: a discrete choice experiment. Support Care Cancer. 2013;21:245–51.

Meghani SH, Chittams J, Hanlon AL, Curry J. Measuring preferences for analgesic treatment for cancer pain: how do African-Americans and Whites perform on choice-based conjoint (CBC) analysis experiments? BMC Med Inform Decis Mak. 2013;13:1.

Milte R, Ratcliffe J, Miller M, Whitehead C, Cameron ID, Crotty M. What are frail older people prepared to endure to achieve improved mobility following hip fracture? A discrete choice experiment. J Rehabil Med. 2013;45:81–6.

Mohamed AF, Zhang J, Johnson FR, Lomon ID, Malvolti E, Townsend R, et al. Avoidance of weight gain is important for oral type 2 diabetes treatments in Sweden and Germany: patient preferences. Diabetes Metab. 2013;39:397–403. https://doi.org/10.1016/j.diabet.2013.06.001.

Moia M, Mantovani LG, Carpenedo M, Scalone L, Monzini MS, Cesana G, et al. Patient preferences and willingness to pay for different options of anticoagulant therapy. Intern Emerg Med. 2013;8:237–43.

Najafzadeh M, Johnston KM, Peacock SJ, Connors JM, Marra MA, Lynd LD, et al. Genomic testing to determine drug response: measuring preferences of the public and patients using discrete choice experiment (DCE). BMC Health Serv Res. 2013;13.

Parkinson B, Goodall S, Norman R. Measuring the loss of consumer choice in mandatory health programmes using discrete choice experiments. Appl Health Econ Health Policy. 2013;11:139–50.

Pisa G, Freytag S, Schandry R. Chronic obstructive pulmonary disease (COPD) patients’ disease-related preferences: a study using conjoint analysis. Patient. 2013;6:93–101.

Schaarschmidt ML, Umar N, Schmieder A, Terris DD, Goebeler M, Goerdt S, et al. Patient preferences for psoriasis treatments: impact of treatment experience. J Eur Acad Dermatol Venereol. 2013;27:187–98.

Veldwijk J, Lambooij M, van Gils P, Struijs J, Smit H, de Wit GA. Type 2 diabetes patients’ preferences and willingness to pay for lifestyle programs: a discrete choice experiment. BMC Public Health. 2013;13:1099.

Whitty JA, Stewart S, Carrington MJ, Calderone A, Marwick T, Horowitz JD, et al. Patient preferences and willingness-to-pay for a home or clinic based program of chronic heart failure management: findings from the Which? trial. PLoS One. 2013;8:1–8.

Zimmermann TM, Clouth J, Elosge M, Heurich M, Schneider E, Wilhelm S, et al. Patient preferences for outcomes of depression treatment in Germany: a choice-based conjoint analysis study. J Affect Disord. 2013;148:210–9. https://doi.org/10.1016/j.jad.2012.11.062.

Cameron MP, Newman PA, Roungprakhon S, Scarpa R. The marginal willingness-to-pay for attributes of a hypothetical HIV vaccine. Vaccine. 2013;31:3712–7. https://doi.org/10.1016/j.vaccine.2013.05.089.

Tokes K, Quadri S, Cahill P, Chiu G, Ivanov A, Tang H. Disease and treatment perceptions among Asian Americans diagnosed with chronic hepatitis B infection. J Gen Intern Med. 2014;29:477–84.

Dixon S, Nancarrow SA, Enderby PM, Moran AM, Parker SG. Assessing patient preferences for the delivery of different community-based models of care using a discrete choice experiment. Health Expect. 2013:1204–14.

Benning TM, Dellaert BGC, Severens JL, Dirksen CD. The effect of presenting information about invasive follow-up testing on individuals’ noninvasive colorectal cancer screening participation decision: results from a discrete choice experiment. Value Health. 2014;17:578–87. https://doi.org/10.1016/j.jval.2014.04.007.

Bessen T, Chen G, Street J, Eliott J, Karnon J, Keefe D, et al. What sort of follow-up services would Australian breast cancer survivors prefer if we could no longer offer long-term specialist-based care? A discrete choice experiment. Br J Cancer. 2014;110:859–67. https://doi.org/10.1038/bjc.2013.800.

Bewtra M, Kilambi V, Fairchild AO, Siegel CA, Lewis JD, Johnson FR. Patient preferences for surgical versus medical therapy for ulcerative colitis. Inflamm Bowel Dis. 2014;20:103–14. http://www.ncbi.nlm.nih.gov/pubmed/24280881.

Fraenkel L, Suter L, Cunningham CE, Hawker G. Understanding preferences for disease-modifying drugs in osteoarthritis. Arthritis Care Res (Hoboken). 2014;66:1186–92. http://www.ncbi.nlm.nih.gov/pubmed/24470354.

Goossens LMA, Utens CMA, Smeenk FWJM, Donkers B, Van Schayck OCP, Rutten-Van Mölken MPMH. Should I stay or should I go home? A latent class analysis of a discrete choice experiment on hospital-at-home. Value Health. 2014;17:588–96. https://doi.org/10.1016/j.jval.2014.05.004.

Hauber AB, Arellano J, Qian Y, González JM, Posner JD, Mohamed AF, et al. Patient preferences for treatments to delay bone metastases. Prostate. 2014;74:1488–97.

Havrilesky LJ, Secord AA, Ehrisman JA, Berchuck A, Valea FA, Lee PS, et al. Patient preferences in advanced or recurrent ovarian cancer. Cancer. 2014;120:3651–9.

Howard K, Gerard K, Adelson P, Bryce R, Wilkinson C, Turnbull D. Women’s preferences for inpatient and outpatient priming for labour induction: a discrete choice experiment. BMC Health Serv Res. 2014;14:1–11.

Johnson P, Bancroft T, Barron R, Legg J, Li X, Watson H, et al. Discrete choice experiment to estimate breast cancer patients’ preferences and willingness to pay for prophylactic granulocyte colony-stimulating factors. Value Health. 2014;17:380–9. https://doi.org/10.1016/j.jval.2014.01.002.

Kawata AK, Kleinman L, Harding G, Ramachandran S. Evaluation of patient preference and willingness to pay for attributes of maintenance medication for chronic obstructive pulmonary disease (COPD). Patient Patient-Cent Outcomes Res. 2014;7:413–26. https://doi.org/10.1007/s40271-014-0064-1.

Kløjgaard ME, Manniche C, Pedersen LB, Bech M, Søgaard R. Patient preferences for treatment of low back pain—a discrete choice experiment. Value Health. 2014;17:390–6. https://doi.org/10.1016/j.jval.2014.01.005.

Nafees B, Setyawan J, Lloyd A, Ali S, Hearn S, Sasane R, et al. Parent preferences regarding stimulant therapies for ADHD: a comparison across six European countries. Eur Child Adolesc Psychiatry. 2014;23:1189–200.

Ostermann J, Njau B, Brown DS, Mühlbacher A, Thielman N. Heterogeneous HIV testing preferences in an urban setting in Tanzania: results from a discrete choice experiment. PLoS One. 2014;9.

Poulos C, Hauber AB, González JM, Turpcu A. Patients’ willingness to trade off between the duration and frequency of rheumatoid arthritis treatments. Arthritis Care Res. 2014;66:1008–15.

Smith ML, White CB, Railey E, Sledge GW. Examining and predicting drug preferences of patients with metastatic breast cancer: using conjoint analysis to examine attributes of paclitaxel and capecitabine. Breast Cancer Res Treat. 2014;145:83–9.

Torbica A, Fattore G, Ayala F. Eliciting preferences to inform patient-centred policies: the case of psoriasis. Pharmacoeconomics. 2014;32:209–23.

Van Den Wijngaard L, Van Wely M, Dancet EAF, Van Mello NM, Koks CAM, Van Der Veen F, et al. Patients’ preferences for gonadotrophin-releasing hormone analogs in in vitro fertilization. Gynecol Obstet Invest. 2014;78:16–21.

Van Haaren-ten Haken T, Pavlova M, Hendrix M, Nieuwenhuijze M, de Vries R, Nijhuis J. Eliciting preferences for key attributes of intrapartum care in the Netherlands. Birth. 2014;41:185–94.

Wijnen BFM, de Kinderen RJA, Colon AJ, Dirksen CD, Essers BAB, Hiligsmann M, et al. Eliciting patients’ preferences for epilepsy diagnostics: a discrete choice experiment. Epilepsy Behav. 2014;31:102–9. https://doi.org/10.1016/j.yebeh.2013.11.029.

Gerard K, Tinelli M, Latter S, Smith A, Blenkinsopp A. Patients’ valuation of the prescribing nurse in primary care: a discrete choice experiment. Health Expect. 2015;18:2223–35.

Krucien N, Le M, Ms V, Pelletier-Fleury N. What are the patients’ preferences for the chronic care model? An application to the obstructive sleep apnoea syndrome. Health Expect. 2014:2536–48.

Bewtra M, Fairchild AO, Gilroy E, Leiman DA, Kerner C, Johnson FR, et al. Inflammatory bowel disease patients’ willingness to accept medication risk to avoid future disease relapse. Am J Gastroenterol. 2015;110:1675–81. https://doi.org/10.1038/ajg.2015.321.

De Vries ST, De Vries FM, Dekker T, Haaijer-Ruskamp FM, et al. The role of patients’ age on their preferences for choosing additional blood pressure-lowering drugs: a discrete choice experiment in patients with diabetes. PLoS One. 2015;10:1–15. https://doi.org/10.1371/journal.pone.0139755.

Böttger B, Thate-Waschke I-M, Bauersachs R, Kohlmann T, Wilke T. Preferences for anticoagulation therapy in atrial fibrillation: the patients’ view. J Thromb Thrombol. 2015;40:406–15. https://doi.org/10.1007/s11239-015-1263-x.

De Bekker-Grob EW, Niers EJ, Van Lanschot JJB, Steyerberg EW, Wijnhoven BPL, et al. Patients’ preferences for surgical management of esophageal cancer: a discrete choice experiment. World J Surg. 2015;39:2492–9.

Howard K, Mph HI, Mhealthec M, Bbus GPS, Mph MPP. Men’s preferences and trade-offs for prostate cancer screening: a discrete choice experiment. 2014;3123–35.

Beulen L, Grutters JPC, Faas BHW, Feenstra I, Groenewoud H, van Vugt JMG, et al. Women’s and healthcare professionals’ preferences for prenatal testing: a discrete choice experiment. Prenat Diagn. 2015;35:549–57.

Böttger B, Thate-Waschke I-M, Bauersachs R, Kohlmann T, Wilke T. Preferences for anticoagulation therapy in atrial fibrillation. J Thromb Thrombol. 2015;40:406–15.

Brown P, Panattoni L, Cameron L, Knox S, Ashton T, Tenbensel T, et al. Hospital sector choice and support for public hospital care in New Zealand: results from a labeled discrete choice survey. J Health Econ. 2015;43:118–27. https://doi.org/10.1016/j.jhealeco.2015.06.004.

Chamot E, Mulambia C, Kapambwe S, Shrestha S, Parham GP, Macwan’gi M, et al. Preference for human papillomavirus-based cervical cancer screening: results of a choice-based conjoint study in Zambia. J Low Genit Tract Dis. 2015;19:119–23.

Chen L-C, Cheng L-J, Zhang Y, He X, Knaggs RD. Acupuncture or low frequency infrared treatment for low back pain in Chinese patients: a discrete choice experiment. PLoS One. 2015;10:e0126912. https://doi.org/10.1371/journal.pone.0126912.

Coxon D, Frisher M, Jinks C, Jordan K, Paskins Z, Peat G. The relative importance of perceived doctor’s attitude on the decision to consult for symptomatic osteoarthritis: a choice-based conjoint analysis study. BMJ Open. 2015;5:1–10.

Danyliv A, Pavlova M, Gryga I, Groot W. Preferences for physician services in Ukraine: a discrete choice experiment. BMJ Open. 2015;30:346–65.

Harris P, Whitty JA, Kendall E, Ratcliffe J, Wilson A, Littlejohns P, et al. The Australian public’s preferences for emergency care alternatives and the influence of the presenting context: a discrete choice experiment. 2015;1–12.

Laba T, Howard K, Rose J, Peiris D, Redfern J, Usherwood T, et al. Patient preferences for a polypill for the prevention of cardiovascular diseases. 2015.

Larson E, Vail D, Mbaruku GM, Kimweri A, Freedman LP, Kruk ME. Moving toward patient-centered care in Africa: a discrete choice experiment of preferences for delivery care among 3,003 Tanzanian women. 2015;1–12.

McAteer A, Yi D, Watson V, Norwood P, Ryan M, Hannaford PC, et al. Exploring preferences for symptom management in primary care. 2015;478–88.

Berhane A, Enquselassie F. Patients’ preferences for attributes related to health care services at hospitals in Amhara Region, northern Ethiopia: a discrete choice experiment. Patient Prefer Adherence. 2015;9:1293–301.

Michaels-Igbokwe C, Lagarde M, Cairns J, Initiative I, Terris-Prestholt F. Designing a package of sexual and reproductive health and HIV outreach services to meet the heterogeneous preferences of young people in Malawi: results from a discrete choice experiment. 2015;15–7.

Ostermann J, Njau B, Mtuy T, Brown DS, Mühlbacher A, Thielman N. One size does not fit all: HIV testing preferences differ among high-risk groups in Northern Tanzania. AIDS Care. 2015;27:595–603. http://www.ncbi.nlm.nih.gov/pubmed/25616562.

Salloum RG, Maziak W, Hammond D, Nakkash R, Islam F, Cheng X, et al. Eliciting preferences for waterpipe tobacco smoking using a discrete choice experiment: implications for product regulation. BMJ Open. 2015;1–8.

Sawamura K, Sano H, Nakanishi M. Japanese public long-term care insured: preferences for future long-term care facilities, including relocation, waiting times, and individualized care. J Am Med Dir Assoc. 2015;16:350.e9–20. https://doi.org/10.1016/j.jamda.2015.01.082.

Brinovec RP, Warren E, Wittgens K, Dickmann P. Decision-making criteria among European patients: exploring patient preferences for primary care services. 2018;25:3–9.

Filby A, Smith AB, Carr LM. Consumer preferences for scanning modality to diagnose focal liver lesions. Int J Technol Assess Health Care. 2015;2:27–35.

Whitty JA, Kendall E, Sav A, et al. Preferences for the delivery of community pharmacy services to help manage chronic conditions. Res Soc Adm Pharm. 2015;11:197–215. https://doi.org/10.1016/j.sapharm.2014.06.007.

Zickafoose JS, DeCamp LR, Prosser LA. Parents’ preferences for enhanced access in the pediatric medical home: a discrete choice experiment. JAMA Pediatr. 2015;169:358–64. http://www.ncbi.nlm.nih.gov/pubmed/25643000.

Hauber AB, Nguyen H, Posner J, Kalsekar I, Ruggles J. A discrete-choice experiment to quantify patient preferences for frequency of glucagon-like peptide-1 receptor agonist injections in the treatment of type 2 diabetes. 2016;7995.

Spinks J, Janda M, Soyer HP, Whitty JA. Consumer preferences for teledermoscopy screening to detect melanoma early. J Telemed Telecare. 2016;22:39–46.

Ramirez M, Wu S, Beale E. Designing a text messaging intervention to improve physical activity behavior among low-income Latino patients with diabetes. Los Angeles, 2014–2015. Prev Chronic Dis. 2016;13:160035. https://doi.org/10.5888/pcd13.160035.

Murchie P, Norwood PF, Pietrucin-Materek M, Porteous T, Hannaford PC, Ryan M. Determining cancer survivors’ preferences to inform new models of follow-up care. Br J Cancer. 2016;115:1495–503. https://doi.org/10.1038/bjc.2016.352.

Tang C, Xu J, Zhang M. The choice and preference for public-private health care among urban residents in China: evidence from a discrete choice experiment. BMC Health Serv Res. 2016;1–11. https://doi.org/10.1186/s12913-016-1829-0.

Dong D, Ozdemir S, Bee YM, Toh S, Bilger M, Finkelstein E. Measuring high-risk patients’ preferences for pharmacogenetic testing to reduce severe adverse drug reaction: a discrete choice experiment. Value Health. 2016;19:767–75. https://doi.org/10.1016/j.jval.2016.03.1837.

Abiiro GA, Torbica A, Kwalamasa K. What factors drive heterogeneity of preferences for micro-health insurance in rural Malawi? 2018;1172–83.

Van Minh H, Chung LH, Giang KB, Duc DM, Hinh ND, Mai VQ, et al. Potential impact of graphic health warnings on cigarette packages in reducing cigarette demand and smoking-related deaths in Vietnam. 2016;17:85–90.

Porteous T, Ryan M, Bond C, Watson M, Watson V. Managing minor ailments; the public’s preferences for attributes of community pharmacies. A discrete choice experiment. 2016;1–15.

Veldwijk J, Essers BAB, Lambooij MS, Dirksen CD, Smit HA, de Wit GA. Survival or mortality: does risk attribute framing influence decision-making behavior in a discrete choice experiment? Value Health. 2016;19:202–9. https://doi.org/10.1016/j.jval.2015.11.004.

Wong SF, Norman R, Dunning TL, Ashley DM, Collins I, et al. A discrete choice experiment to examine the preferences of patients with cancer and their willingness to pay for different types of health care appointments. J Natl Compr Cancer Netw. 2016;14:311–9.

Ozawa S, Grewal S, Bridges JFP. Household size and the decision to purchase health insurance in Cambodia: results of a discrete-choice experiment with scale adjustment. Appl Health Econ Health Policy. 2016;14:195–204.

Czoli CD, Goniewicz M, Islam T, Kotnowski K, Hammond D. Consumer preferences for electronic cigarettes: results from a discrete choice experiment. 2016;30–7.

Veldwijk J, Lambooij MS, Kallenberg FGJ, van Kranen HJ, Bredenoord AL, Dekker E, et al. Preferences for genetic testing for colorectal cancer within a population-based screening program: a discrete choice experiment. 2016;1:361–6.

Kotnowski K, Fong GT, Gallopel-Morvan K, Islam T, Hammond D. The impact of cigarette packaging design among young females in Canada: findings from a discrete choice experiment. 2018;1348–56.

Kan HJ, de Bekker-Grob EW, van Marion ES, van Oijen GW, van Nieuwenhoven CA, Zhou C, et al. Patients’ preferences for treatment for Dupuytren’s disease: a discrete choice experiment. Plast Reconstr Surg. 2016;137(1):165–73. https://doi.org/10.1097/prs.0000000000001878.

Marshall HS, Chen G, Clarke M, Ratcliffe J. Adolescent, parent and societal preferences and willingness to pay for meningococcal B vaccine: a discrete choice experiment. Vaccine. 2016;34:671–7. https://doi.org/10.1016/j.vaccine.2015.11.075.

Wang B, Chen G, Ratcliffe J, Haji Ali Afzali H, Giles L, et al. Adolescent values for immunisation programs in Australia: a discrete choice experiment. PLoS One. 2017;12:e0181073. https://doi.org/10.1371/journal.pone.0181073.

Determann D, Lambooij MS, Steyerberg EW, de Bekker-Grob EW, de Wit GA. Impact of survey administration mode on the results of a health-related discrete choice experiment: online and paper comparison. Value Health. 2017;20:953–60. https://doi.org/10.1016/j.jval.2017.02.007.

Wright SJ, Ulph F, Dharni N. Eliciting preferences for information provision in newborn bloodspot screening programs. Value Health. 2017;20:651–61. https://doi.org/10.1016/j.jval.2016.11.007.

Carlin CS, Higuera L, Anderson S. Improving patient-centered care by assessing patient preferences for multiple sclerosis disease-modifying agents: a stated-choice experiment. 2017;1–7.

Eliasson L, de Freitas HM, Dearden L, Calimlim B, Lloyd AJ. Patients’ preferences for the treatment of metastatic castrate-resistant prostate cancer: a discrete choice experiment. Clin Ther. 2017;39:723–37. https://doi.org/10.1016/j.clinthera.2017.02.009.

Marshall DA, Gonzalez JM, MacDonald KV, Johnson FR. Estimating preferences for complex health technologies: lessons learned and implications for personalized medicine. Value Health. 2017;20:32–9. https://doi.org/10.1016/j.jval.2016.08.737.

Cranen K, Groothuis-Oudshoorn CG, Vollenbroek-Hutten MM, IJzerman MJ. Toward patient-centered telerehabilitation design: understanding chronic pain patients’ preferences for web-based exercise telerehabilitation using a discrete choice experiment. J Med Internet Res. 2017;19:e26. http://www.ncbi.nlm.nih.gov/pubmed/28108429.

Determann D, Lambooij MS, Gyrd-Hansen D, de Bekker-Grob EW, Steyerberg EW, Heldoorn M, et al. Personal health records in the Netherlands: potential user preferences quantified by a discrete choice experiment. 2017;24:529–36.

Flood EM, Bell KF, Cruz MC De, Ginchereau FM. Patient preferences for diabetes treatment attributes and drug classes. 2017;7995.

van Deen WK, Nguyen D, Duran NE. Value redefined for inflammatory bowel disease patients: a choice-based conjoint analysis of patients’ preferences. 2017;455–65.

Miners AH, Llewellyn CD, Cooper VL, Youssef E, Pollard AJ, Lagarde M, et al. A discrete choice experiment to assess people living with HIV’s (PLWHIV’s) preferences for GP or HIV clinic appointments. 2016;1–7.

Hauber AB, Mohamed AF, Gonzalez JM, Fairchild O, Zelt SC, Graff O, et al. Benefit-risk tradeoff preferences for chronic hand eczema treatments. 2017;6634.

Mühlbacher AC, Bridges JFP, Bethge S, Dintsios C-M, Schwalm A, Gerber-Grote A, et al. Preferences for antiviral therapy of chronic hepatitis C: a discrete choice experiment. Eur J Health Econ. 2017;18:155–65. https://doi.org/10.1007/s10198-016-0763-8.

Marshall T, Pugh A, Fairchild A, Hass S. Patient preferences for device-aided treatments indicated for advanced Parkinson disease. Value Health. 2017;20:1383–93. https://doi.org/10.1016/j.jval.2017.06.001.

Seanehia J, Treibich C, Holmberg C, Müller-Nordhorn J, Casin V, Raude J, et al. Quantifying population preferences around vaccination against severe but rare diseases: a conjoint analysis among French university students, 2016. Vaccine. 2017;35:2676–84. http://www.ncbi.nlm.nih.gov/pubmed/28408120.

Zipursky RB, Cunningham CE, Stewart B, Rimas H, Cole E, Vaz SM. Characterizing outcome preferences in patients with psychotic disorders: a discrete choice conjoint experiment. Schizophr Res. 2017;185:107–13. http://www.ncbi.nlm.nih.gov/pubmed/28038919.

Alayli-Goebbels AFG, Dellaert BGC, Knox SA, Ament AJHA, Lakerveld J, Bot SDM, et al. Consumer preferences for health and nonhealth outcomes of health promotion: results from a discrete choice experiment. Value Health. 2013;16:114–23.

Bailey H. Results from a preliminary study to develop the quality adjustments for quality adjusted life year values for Trinidad and Tobago. West Indian Med J. 2013;62:543–7.

Gu NY, Botteman MF, Gerber RA, Ji X, Postema R, Wan Y, et al. Eliciting health state utilities for Dupuytren’s contracture using a discrete choice experiment. Acta Orthop. 2013;84:571–8.

Norman R, Cronin P, Viney R. A pilot discrete choice experiment to explore preferences for EQ-5D-5L health states. Appl Health Econ Health Policy. 2013;11:287–98.

Prosser LA, Payne K, Rusinak D, Shi P, Messonnier M. Using a discrete choice experiment to elicit time trade-off and willingness-to-pay amounts for influenza health-related quality of life at different ages. Pharmacoeconomics. 2013;31:305–15.

Howard K, Salkeld GP, Patel MI, Mann GJ, Pignone MP. Men’s preferences and trade-offs for prostate cancer screening: a discrete choice experiment. Health Expect. 2015;18:3123–35.

Notten KJB, Essers BA, Weemhoff M, Rutten AGH, Donners J, van Gestel I. Do patients prefer mesh or anterior colporrhaphy for primary correction of anterior vaginal wall prolapse: a labelled discrete choice experiment. 2015;873–80.

van den Wijngaard L, Rodijk ICM, van der Veen F, Erven MHWG, Koks CAM, Verhoeve HR, et al. Patient preference for a long-acting recombinant FSH product in ovarian hyperstimulation in IVF: a discrete choice experiment. 2015;30:331–7.

Kauf TL, Yang J, Kimball AB, Sundaram M, Okun M, Mulani P, et al. Psoriasis patients’ willingness to accept side-effect risks for improved treatment efficacy. 2015;6634.

Miller FA, Hayeems RZ, Bombard Y, Cressman S, Barg C, Carroll JC, Wilson B, Little J, Allanson J, Chakraborty P, Giguere Y, Regier DA. Public perceptions of the benefits and risks of newborn screening. Pediatrics. 2015;136(2):e413–23.

Mohamed AF, González JM, Fairchild A. Patient benefit-risk tradeoffs for radioactive iodine-refractory differentiated thyroid cancer treatments. 2015;2015.

Feliciano R, Ponte C, Botella M, Lizán L. Patients’ and physicians’ preferences for type 2 diabetes mellitus treatments in Spain and Portugal: a discrete choice experiment. 2015;1443–58.

Christin J, Mu AC. Chronic pain patients’ treatment preferences: a discrete-choice experiment. 2015;613–28.

Ngorsuraches S, Nawanukool K, Petcharamanee K, Poopantrakool U. Parents’ preferences and willingness-to-pay for human papilloma virus vaccines in Thailand. J Pharm Policy Pract. 2015. https://doi.org/10.1186/s40545-015-0040-8.

Ngorsuraches S, Thongkeaw K. Patients’ preferences and willingness-to-pay for postmenopausal hormone receptor-positive, HER2-negative advanced breast cancer treatments after failure of standard treatments. SpringerPlus. 2015.

Regier DA, Peacock SJ, Pataky R, van der Hoek K, Jarvik G, Veenstra DA. Societal preferences for the return of incidental findings from clinical genomic sequencing: a discrete choice experiment. CMAJ. 2015;187(6):E190–7.

Ryan M. Gaining pounds by losing pounds: preferences for lifestyle interventions to reduce obesity. 2015;161–82.

Finkelstein E, Malhotra C, Chay J, Ozdemir S, Chopra A, Kanesvaran R. Impact of treatment subsidies and cash payouts on treatment choices at the end of life. Value Health. 2016;19:788–94. https://doi.org/10.1016/j.jval.2016.02.015.